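import math
import os

import podonos
from podonos import *
from pydub import AudioSegment
from pydub.generators import WhiteNoise


def add_noise(sound, noise_level=0.005):
    """Overlay white noise on `sound` (a pydub AudioSegment)."""
    # Assumption: noise_level is a linear amplitude relative to full scale,
    # so it is converted to dBFS for pydub's `volume` argument.
    noise_dbfs = 20 * math.log10(noise_level)
    noise = WhiteNoise().to_audio_segment(duration=len(sound), volume=noise_dbfs)
    noisy_sound = sound.overlay(noise)
    return noisy_sound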

client = podonos.init()

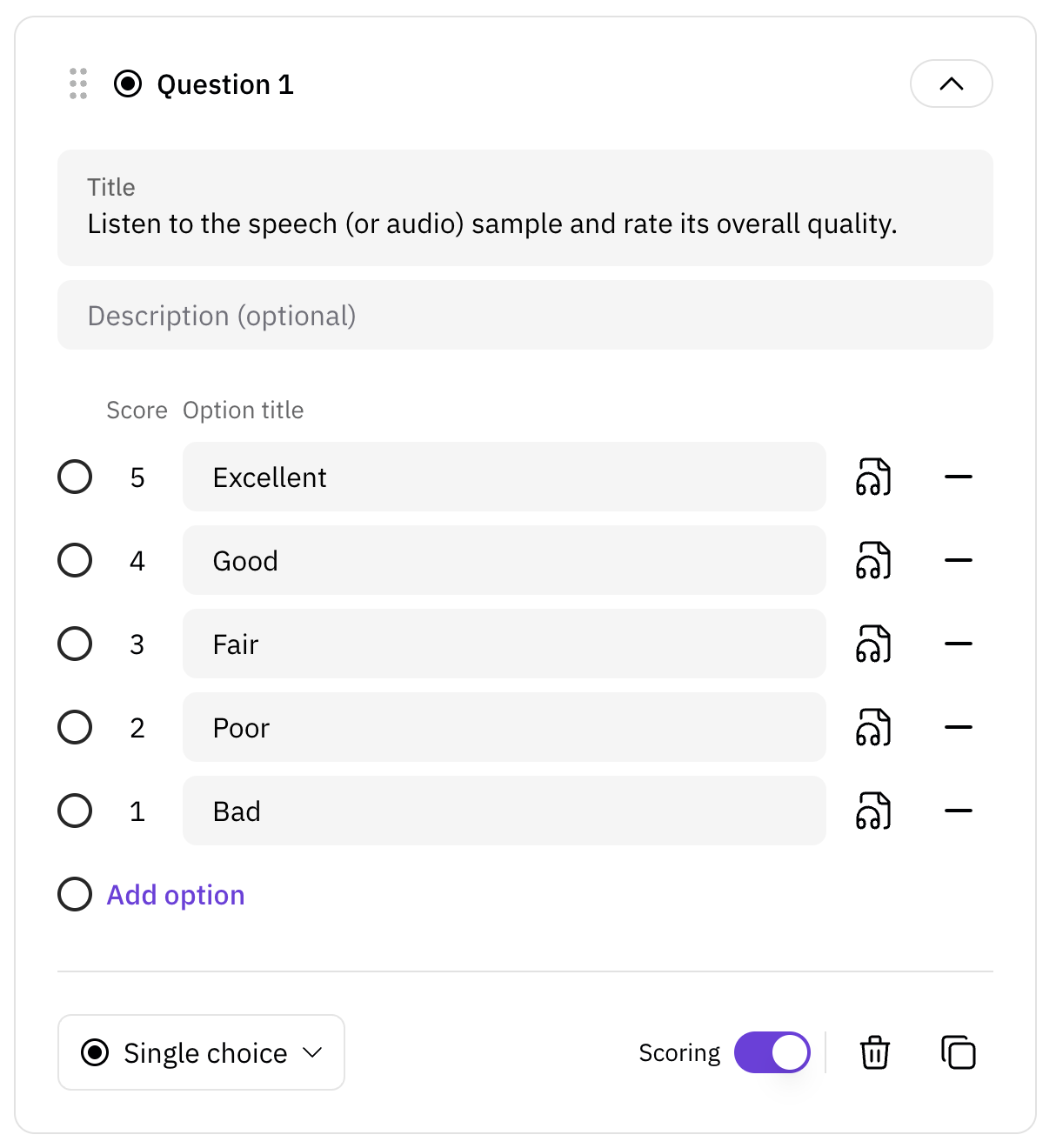

etor = client.create_evaluator(
    name="Quality evaluation with/without additive noise",
    desc="Noise is added to the clean audio",
    type="QMOS", lan="de-de", num_eval=7)

src_folder = "./src"

dst_folder = "./dst"

files = os.listdir(src_folder)

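# Make sure the output folder exists before exporting.
os.makedirs(dst_folder, exist_ok=True)

for name in files:
    # Load the clean audio; add_noise expects an AudioSegment, not a file name.
    sound = AudioSegment.from_file(os.path.join(src_folder, name))
    noisy_sound = add_noise(sound)
    noisy_sound.export(os.path.join(dst_folder, name), format="wav")

    # Add files
    etor.add_file(File(path=os.path.join(src_folder, name), model_tag='Original',
                       tags=["original"]))
    etor.add_file(File(path=os.path.join(dst_folder, name), model_tag='Noisy',
                       tags=["level 0.005"]))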

etor.close()